Techno-Optimism and e/acc vs. d/acc

Hello again, everyone, and welcome to all new subscribers!

This is the Web3 + AI newsletter, where we explore the intersection of blockchain and artificial intelligence. Today's edition is a bit different, since it is dedicated to the major concepts and ideas that have recently been pushing the technology world forward. It comes out on the first anniversary of ChatGPT's release, the event that fueled many of the debates I examine below.

Thank you for being here! Let's dive in!

Will Regulating AI Threaten Web3 Innovation?

A couple of weeks ago, I was a guest writer at Track My Token with a piece on AI regulation and how it may affect small-scale innovation, like that taking place in Web3. I was particularly interested in exposing the most vocal people who instill fears of AI-powered human extinction into society and call for urgent regulation, while simultaneously benefiting from the panic they have manufactured. Those same people are the ones tailoring the future legislation, whereas, I argue, small startup founders should also get a seat at the negotiating table. Crypto founders especially have a lot to offer in terms of privacy-preserving, security-preserving, and data-immutability solutions. Take a look at my article here and let me know what you think.

Meanwhile, it seems that some AI doomers have begun to realize the negative effects of their alarmist rhetoric and of the ongoing regulatory efforts it has triggered. Nick Bostrom, a philosopher who has been warning about AI's existential risks and dangers for more than 20 years, now worries these concerns may lead the world into technological stagnation. “It would be tragic if we never developed advanced artificial intelligence,” Bostrom said.

It is high time that we as a society soberly and objectively assess the degree of risk of these two scenarios, because we have quite recently seen what mistrust toward technological advances means amid a global pandemic.

Marc Andreessen's Techno-Optimism

Fortunately, prominent tech leaders have been countering the naysayers and continue to do so. Let me give you some context. Back in October, as a response to the above-mentioned chorus of sinister opinions on AI, Marc Andreessen released “The Techno-Optimist Manifesto”. Andreessen, a veteran Silicon Valley entrepreneur and venture capital investor whose portfolio includes Web3 and AI, has consistently tried to calm the panic around AI by demonstrating that technology-induced paranoia has historically been irrational. Moreover, like me, he argued:

This moral panic is already being used as a motivating force by a variety of actors to demand policy action – new AI restrictions, regulations, and laws. These actors, who are making extremely dramatic public statements about the dangers of AI – feeding on and further inflaming moral panic – all present themselves as selfless champions of the public good.

But are they?

I invite you to read the full article “Why AI Will Save the World” published in June 2023. It is enlightening!

Evidently, that piece failed to tame the AI hysteria, so Andreessen decided to go one step further with his Manifesto. In it, he both exposes and dismisses all attempts to denigrate technology and to decelerate progress and scientific advances. The manifesto starts with these words:

We are being lied to.

We are told that technology takes our jobs, reduces our wages, increases inequality, threatens our health, ruins the environment, degrades our society, corrupts our children, impairs our humanity, threatens our future, and is ever on the verge of ruining everything.

And continues to say:

Our civilization was built on technology.

Our civilization is built on technology.

Technology is the glory of human ambition and achievement, the spearhead of progress, and the realization of our potential.

For hundreds of years, we properly glorified this – until recently.

I am here to bring the good news.

We can advance to a far superior way of living, and of being.

We have the tools, the systems, the ideas.

We have the will.

It is time, once again, to raise the technology flag.

It is time to be Techno-Optimists.

Once again, Andreessen pays special attention to AI:

We believe Artificial Intelligence is our alchemy, our Philosopher’s Stone – we are literally making sand think.

We believe Artificial Intelligence is best thought of as a universal problem solver. And we have a lot of problems to solve.

We believe Artificial Intelligence can save lives – if we let it. Medicine, among many other fields, is in the stone age compared to what we can achieve with joined human and machine intelligence working on new cures. There are scores of common causes of death that can be fixed with AI, from car crashes to pandemics to wartime friendly fire.

We believe any deceleration of AI will cost lives. Deaths that were preventable by the AI that was prevented from existing is a form of murder.

We believe in Augmented Intelligence just as much as we believe in Artificial Intelligence. Intelligent machines augment intelligent humans, driving a geometric expansion of what humans can do.

We believe Augmented Intelligence drives marginal productivity which drives wage growth which drives demand which drives the creation of new supply… with no upper bound.

And one last quote we should specifically ponder on:

We believe that we are, have been, and will always be the masters of technology, not mastered by technology. Victim mentality is a curse in every domain of life, including in our relationship with technology – both unnecessary and self-defeating. We are not victims, we are conquerors.

I encourage you to read the full Manifesto as well, since it resonated widely across the whole technology world, including Web3. It spurred a lively discussion and received many responses.

The e/acc Movement

In fact, Andreessen's Techno-Optimism builds upon another techno-philosophical movement called e/acc, or Effective Accelerationism. The term was coined by Beff Jezos and Bayeslord back in 2022, again as a counterpoint to the impetus to regulate AI. It draws from Nick Land's accelerationism theories, although Land's theories are much more dystopian and radical in their assumption that technological acceleration will lead to capitalism's destruction. e/acc, by contrast, is fundamentally optimistic, which is probably why it has been gaining numerous new followers over the last few months.

By and large, e/acc argues that humanity should embrace and seek to accelerate technological progress, especially in AI, because, first, it is inevitable, and, second, it is the key to a post-scarcity world. The e/acc proponents believe that technological innovation fueled by capital, or what they refer to as techno-capital, is capable of solving all of humanity's problems. AI, in particular, should be able to battle climate change, diseases, and poverty, if enough investment and research are put into it. Or as Andreessen chimes in:

We believe that there is no material problem – whether created by nature or by technology – that cannot be solved with more technology.

Furthermore, these e/acc ideas arise in opposition to the concepts of degrowth and deceleration, whose advocates hold that only economic shrinking and anti-consumerism can prevent environmental disasters and save humanity. They defend the theory that the Earth does not have enough resources to feed an ever-growing human population, the same reasoning that resulted in the disastrous one-child policy in China. Meanwhile, empirical evidence shows that birth rates naturally decline as people rise out of poverty and as girls get more educated.

ICYMI - Physics of e/acc - Remastered

— Beff Jezos — e/acc ⏩ (@BasedBeffJezos) September 24, 2023

Cleaned up audio/video version of the talk from @AGIHouseSF now available: https://t.co/SGhPGu218P pic.twitter.com/HENiB7zN8g

Over the last year, these same voices, which the e/acc community has nicknamed decels, or degrowthers, have been calling for stringent AI regulations and imposing an embargo on developing AI. A social media poll organized by Vitalik Buterin can serve as a good test of the degree of diffusion of these ideas:

In nine out of nine cases, the majority of people would rather see highly advanced AI delayed by a decade outright than be monopolized by a single group, whether it's a corporation, government or multinational body.

However, nobody gives any context on what will make the situation in a decade different, and what will prevent a monopolizing concentration of power from forming then. Similarly, no one comments on the practicalities of banning people from training AI models on their home computers.

By contrast, e/acc argues that it is precisely heavy regulation that would result in an even greater centralization of AI development. That way, if or when AGI is achieved, it will be concentrated in the hands of only a few actors, who will wield dangerous amounts of power. Moreover, it would be much more likely for rogue actors to seize advanced AI models. Instead, e/acc promotes free innovation and a healthy, competitive open-source community as the guarantee of consistent and adequate oversight of AI development.

Check out the e/acc Substack to learn more about the e/acc vision.

Also, there is already a directory of projects that align with e/acc's principles, many of which combine Web3 and AI.

The Network State

I want to add a few more valuable resources for your reference, specifically Balaji Srinivasan's The Network State, which to some extent served as an inspiration for the e/acc founders. It presents the idea that, just as we have built digital businesses and currencies, we could build a digital community and later convert it into a real “ideologically aligned but geographically decentralized” state.

I invite you to read the full book here and watch the recently held Network State Conference here.

Here's the livestream for the Network State Conference (part 1 of 2). https://t.co/rRvHYOVTOq

— Balaji (@balajis) October 30, 2023

And here.

Here is part 1 of 2 of the Network State Conference livestream if you missed it. https://t.co/igmIC1XqIr

— Balaji (@balajis) October 30, 2023

I also recommend you listen to the Bankless episode “Can AI and Crypto save the US?”, which discussed many of the ideas I explore here, including the state of AI regulation and whether technology is neutral or not.

Effective Altruism

Bear with me, because it gets really interesting. Some of those degrowthers and doomers I mentioned above have founded their own informal organization, named Effective Altruism (EA). They refuse to call themselves an "organization" and prefer the term "movement", but the establishment, funding, and functioning of EA are too intentional for it to be just a movement. Here is what I mean: EA was formed by some of the richest male Silicon Valley leaders, including Elon Musk, Peter Thiel, Vitalik Buterin, and the biggest crypto villain of all time, Sam Bankman-Fried. SBF was even one of their largest funders.

You may say that some of these people are more likely to be counted among the Techno-Optimists than among the degrowthers, and you would be right. But that is what makes EA so slick. Elon Musk was one of the most vocal "experts" urging a halt to advanced AI development, only to launch his own AI venture.

EA promotes itself as a community of people “doing as much good as possible” and employing technology to fight the existential problems of our time. Humanity's most pressing issue, according to EA, is a potential AGI-powered extermination and apocalypse. However, their “ideology is now driving the research agenda in the field of artificial intelligence (AI), creating a race to proliferate harmful systems, ironically in the name of ‘AI safety’.” That is why they are extremely active in demanding strict AI legislation.

It seems like EA and their ideas played a major role in the OpenAI vs. Sam Altman debacle, especially taking into account that Elon Musk and Peter Thiel, among others, are OpenAI's founders. Moreover, it appears EA is an organization created to serve somewhat as a disguise for billionaires pursuing their agendas despite everything and everyone.

Vitalik Buterin and his Techno-Optimism

A few days ago, Vitalik Buterin, co-founder of and main driving force behind the Ethereum blockchain, published his take on Andreessen's Techno-Optimism. In it, he agrees, in principle, with Andreessen's enthusiasm about technological advancements and all the improvements they have brought to people's lives:

the benefits of technology are really friggin massive, on those axes where we can measure it the good massively outshines the bad, and the costs of even a decade of delay are incredibly high.

Vitalik, however, is a bit more cautious in his reasoning than Andreessen. He argues that it was not simply “the invisible hand of the market” or the “techno-capital machine” that boosted progress. Rather, “intentional action, coordinated through public discourse and culture shaping the perspectives of governments, scientists, philanthropists, and businesses” also played a huge role. That statement reads somewhat differently in light of Vitalik's EA membership and the movement's extensive lobbying.

Unfortunately and very disappointingly for me, when talking about AI, Vitalik only perpetuates the familiar mantra:

But a superintelligent AI, if it decides to turn against us, may well leave no survivors, and end humanity for good.

What is more, he does so without giving any explanation or context of how and why AI would make such a decision and turn against us. The only “irrefutable proof” he cites, like many before him, is that, once AI becomes superintelligent, it would be unimaginable for a more intelligent creature to choose to obey a less intelligent one. Buterin even goes on to quote sci-fi novels and series as if they were convincing arguments, or even evidence proving his point.

e/acc vs. d/acc

Thankfully, Buterin offers a much more moderate opinion on AI regulation, one that clearly distinguishes him from most of the EA figures:

This seems like an important fact to understand for anyone pursuing AI regulation. Current approaches have been focusing on creating licensing schemes and regulatory requirements, trying to restrict AI development to a smaller number of people, but these have seen popular pushback precisely because people don't want to see anyone monopolize something so powerful. Even if such top-down regulatory proposals reduce risks of extinction, they risk increasing the chance of some kind of permanent lock-in to centralized totalitarianism. Paradoxically, could agreements banning extremely advanced AI research outright (perhaps with exceptions for biomedical AI), combined with measures like mandating open source for those models that are not banned as a way of reducing profit motives while further improving equality of access, be more popular?

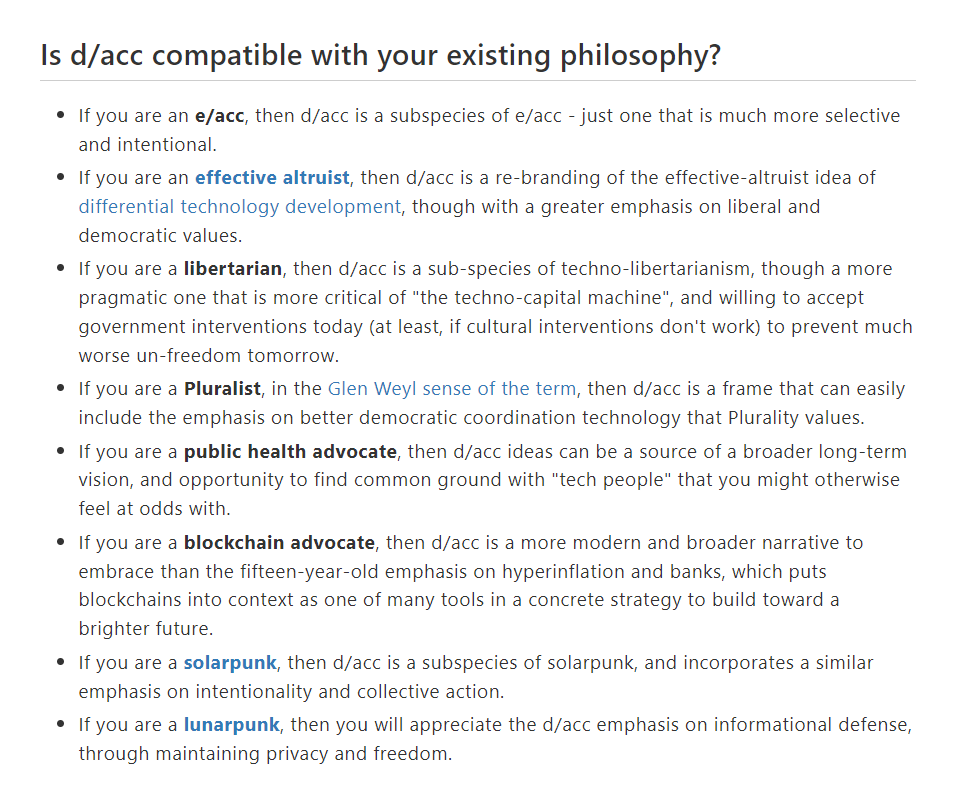

Vitalik also responds to the e/acc community by proposing d/acc, where d can stand for defensive, decentralization, democracy, or differential. He agrees that technological progress should be embraced, but argues that not every technology is worth pursuing. He has outlined the main differences between d/acc and other prominent ideologies, including those I discussed above, here:

Buterin also highlights solutions based on blockchain and zero-knowledge proofs, and adds:

These technologies are an excellent example of d/acc principles: they allow users and communities to verify trustworthiness without compromising privacy, and protect their security without relying on centralized choke points that impose their own definitions of who is good and bad.

Here is one final quote to put things into perspective. I hope you will read Vitalik's full article and share your thoughts with me.

Today, the AI safety movement's primary message to AI developers seems to be "you should just stop". One can work on alignment research, but today this lacks economic incentives. Compared to this, the common e/acc message of "you're already a hero just the way you are" is understandably extremely appealing. A d/acc message, one that says "you should build, and build profitable things, but be much more selective and intentional in making sure you are building things that help you and humanity thrive", may be a winner.

For everyone who reached this point, congratulations and thank you for reading! Believe it or not, it was not my intention to write a 3,000-word newsletter. But once I found myself in that rabbit hole, it was difficult to climb out. Moreover, it was very important for me to outline the people who mold the world we live in today, and to help you recognize the principles and values that motivate them.

Most of the ideologies I cited above are extreme, and it would be ill-advised to apply them as is. However, there are a few aspects with which I can wholeheartedly agree. First and foremost, progress is indeed inevitable, and we kid ourselves if we think we can contain it. Second, if we look at it objectively, we will see that banning AI innovation or trying to postpone it simply won't work. And third, instead of prohibitions and overregulation, we should rely on open-sourcing AI research, democratizing access, and decentralizing power and control.

Disclaimer: None of this should or could be considered financial advice. You should not take my words at face value; rather, do your own research (DYOR) and share your thoughts to create a fruitful discussion.
